AI: The future of food creative?

Like many creatives, I have become obsessed with AI visual creation platforms. Both MidJourney and DALL·E 2 are almost permanent fixtures in my browser tabs, and my bookmarks are overflowing with links to communities, GitHub repos, and galleries. But is this another flash in the pan, or is it really going to influence the way we handle creative ideation in the future?

About this post

Published: October 19, 2022

Author: Maite Gonzalez

First off, fear not, my fellow creatives: this is not a route to ‘creative-less campaigns’, nor is it the answer to every creative brief.

It’s not designed to replace the ideation process, or remove the need for illustrations, styleframes, moodboards, or storyboards. It’s genuinely amazing though: it’s flexible, it’s really interesting, and it’s extremely entertaining. But it’s just one part of the process, an evolution of how we currently work.

With something this new, the options and opportunities feel endless, and when you fall down the rabbit hole of everyone creating together on Discord, it’s very easy to get epically distracted. So my first job is to focus this conversation on one question: how could Powerhouse use AI creative to support our core specialism, FMCG?

And, more precisely, how can AI ideation be used in the food sector?

Now, I’m not just talking about artistic impressionism here. The mixing and moulding of artistic interpretation for art’s sake is amazing, but we are talking about commercial creative intent, aligned around a client’s needs. Even so, it’s interesting to see how AI interprets the visual content we produce and post online.

Experiments like This Food Does Not Exist, which uses generative image models to recreate familiar styles of digital food photography, and more creative approaches such as WeirdAlChef by the data scientists at BuzzFeed, show the intent and the potential appetite for a considered understanding of AI food creative.

How do we integrate Visual AI into our creative team?

Our creative world has changed how we do things. As modern creatives, we’re comfortable with pen and paper (or Procreate and an Apple Pencil). We’re also happy filtering through enormous amounts of consumer data and insights, industry opportunities, Behance, Dribbble, stock sites, and social channels galore as a starting point. So now we’re adding AI creative to this list too!

Essentially, it’s like covering a canvas in a block colour before you start painting. I think it’s a procrastination killer, a starting point, or a visual polling of the internet before we jump into scripting or drawing anything.

What’s our creative approach to food?

If you ask our Chefs – they would tell you it’s in the taste, the nutrition, the ingredients, and how we convey this together in the dining experience.

If you ask our Food Stylists – they would talk about artfully enhancing the food to appear as photogenic as possible, about how the visual works alongside the set dressing, and about understanding the needs of the audience and the product.

If you ask our Creatives – they would talk about narrative, imagination, colour, lighting, and composition.

If you ask me, there’s an opportunity for all of these creative endeavours to be influenced by AI visual creative.

We could use machine learning to visually scour the internet for relationships between ingredients, taste combinations, and even brand styles and pairings, and deliver scamps back in visually provoking and exciting ways.

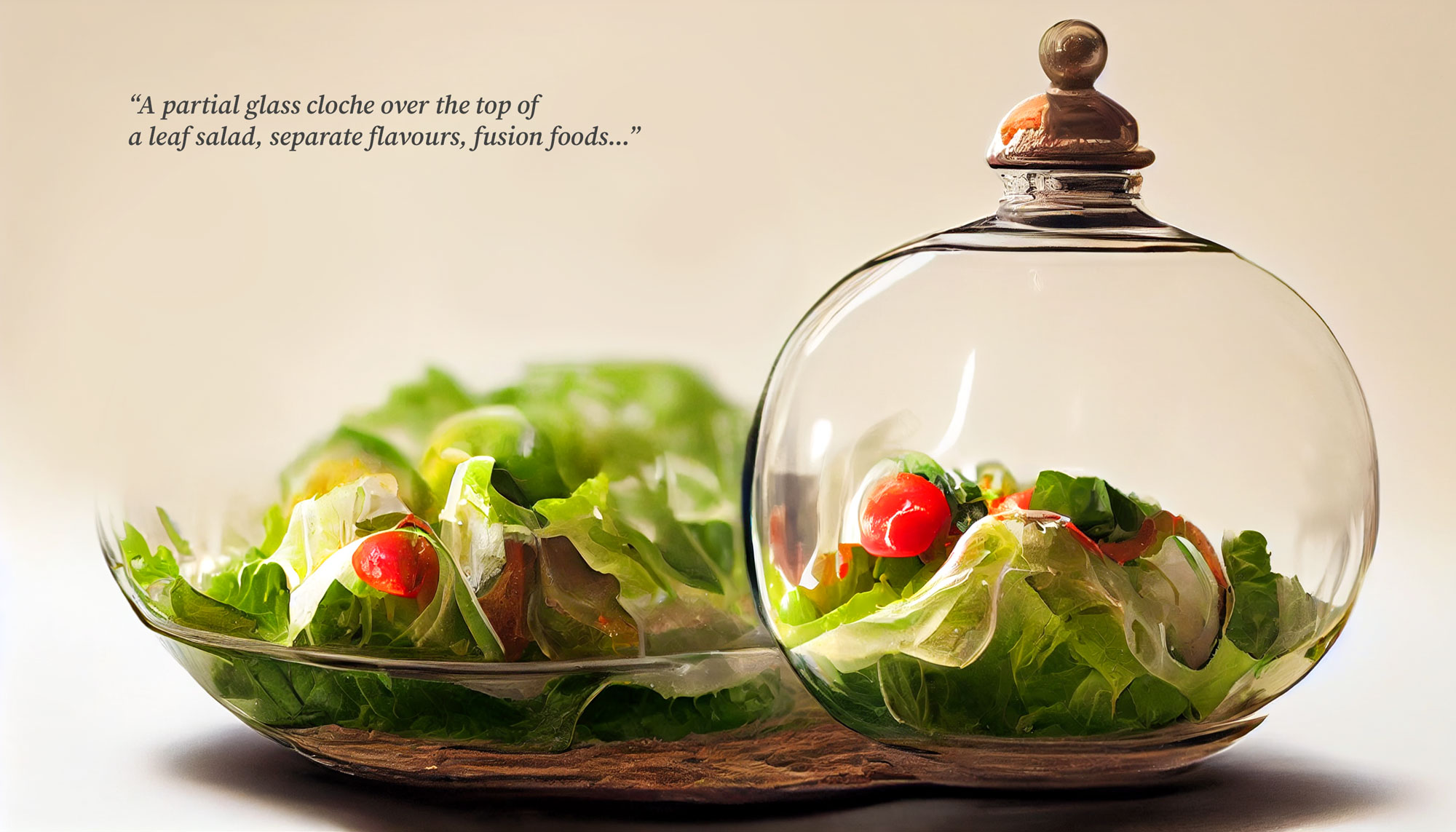

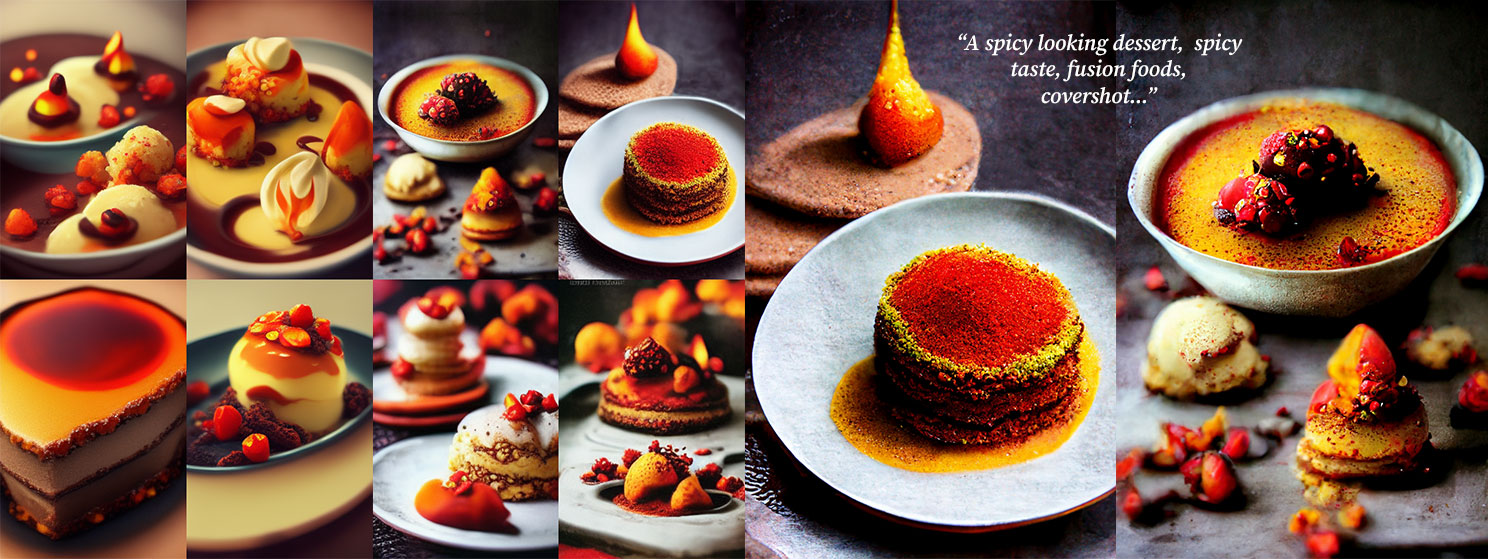

Imagine being able to rapidly prototype innovative plating ideas, compare cuisines, ingredient pairings, or visual experiments in food without needing to turn a hob on or cook anything?

Imagine being able to explore colour, composition, and physical framing of multiple components within seconds?

Imagine being able to reflect occasions, cultural fusions, and context of dishes and cuisines without ever leaving the office?

Imagine the ability to crowdsource how people pair food products, not based on their words, or their verbal descriptions, but through their pictures. What side dish most commonly accompanies sausages in the UK on a weeknight dinner? Why?

We can take this a little further too: could we use AI creative to help us convey ‘flavour and taste’ through visual iconography? What does ‘spicy’ look like? And how could this influence the visual representation of that dish?

Could we dress, style, or garnish a dish to convey a flavour profile – without needing to visually show the ingredients?

And could we use this to encourage healthier eating habits by changing how certain food groups are typically represented visually? I think we are only scratching the surface of how we can (and will) use this to influence and augment our creative process, but we are excited to see how this develops…

But where’s the skill in using MidJourney or DALL·E 2 to generate creative?

Well, this is all driven by what you input. The text, the syntax, the order, and the flow of the language define the way the images are returned.

It’s like learning to speak all over again. You start with one- and two-word queries, slowly building out your targeted repertoire of more fruitful prompts.

[descriptive request, expanded and iterated to include ‘with’ and ‘including’ terms] + visual style references + rendering references + direct instructions to MidJourney (such as scale, quality, aspect ratio, and stylistic license).
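To make that structure concrete, here is a minimal sketch in Python of how a prompt could be assembled from those four parts. The function name `build_prompt`, its arguments, and the sample dish are hypothetical and purely for illustration. The `--ar 3:2` aspect ratio flag is an assumption about MidJourney’s parameter syntax, so check it against the current documentation before relying on it.

```python
def build_prompt(subject, details=(), style_refs=(), render_refs=(), params=()):
    """Assemble a text prompt in the order:
    description, style references, rendering references, tool instructions.
    (Hypothetical helper for illustration only.)
    """
    # Descriptive request, extended with 'with ...' terms as it is iterated.
    description = subject
    if details:
        description += " with " + " and ".join(details)

    # Comma-separate the descriptive and reference parts, skipping blanks.
    parts = [description, *style_refs, *render_refs]
    text = ", ".join(p.strip() for p in parts if p.strip())

    # Direct instructions to the tool go last, separated by spaces.
    flags = " ".join(params)
    return f"{text} {flags}".strip()


prompt = build_prompt(
    "grilled sausages",
    details=["mashed potato", "onion gravy"],
    style_refs=["editorial food photography"],
    render_refs=["soft natural light", "shallow depth of field"],
    params=["--ar 3:2"],  # assumed aspect ratio flag; verify before use
)
print(prompt)
```

Keeping each part in its own list makes it easy to swap one ingredient, one style reference, or one instruction at a time and compare the results side by side.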

Successful use of these tools depends on how you ‘engineer your prompts’. Since the data comes from the way the internet is structured, what if we used keyword analysis to feed our vocabulary?

And even when the results are wild, we’re pattern-hunting people, always searching for meaning in visuals. So when we see bonkers ideas or crazy interpretations, this is just our prompt to consider the “what ifs?”

It’s like the best ideation session ever. There are no bad ideas, right?